Key Takeaways:

- AI and Machine Learning enable systems to perform tasks that typically require human intelligence by identifying patterns in data and making predictions.

- Types of AI include Artificial Narrow Intelligence (ANI), built for specific tasks, and Artificial General Intelligence (AGI), which remains theoretical. ANI applications are already transforming industries like healthcare, cybersecurity, and communication.

- Ethics and Governance are crucial as AI raises concerns about bias, misuse, and transparency, requiring strong oversight.

- The Future of AI promises innovations like automation, but oversight is needed to manage risks like job loss.

Artificial Intelligence (AI) has moved from the pages of science fiction into the fabric of our daily lives. It operates behind the scenes, shaping how we work, communicate, and interact with technology. From the personalized recommendations on streaming services to the virtual assistants that manage our schedules, AI applications are now commonplace. For those new to this powerful technology, the field can appear complex and overwhelming. But what is Artificial Intelligence at its core, how does it function, and what are its real-world implications?

This guide serves as a foundational introduction to AI for beginners. We will demystify essential concepts like machine learning, explore the different types of AI, and discuss the critical ethical considerations that guide its responsible development. You will gain a clear understanding of AI's capabilities and its transformative potential across industries. By the end, you will have a solid framework for navigating the ever-evolving landscape of artificial intelligence and its impact on the modern world.

What Is Artificial Intelligence and How Does It Work?

Artificial Intelligence (AI) refers to computer systems or machines engineered to perform tasks that typically require human intelligence. These tasks include learning from experience, recognizing complex patterns, understanding human language, and making decisions with a high degree of autonomy. At its heart, AI is about creating technology that can perceive, reason, and adapt, moving beyond simple, pre-programmed instructions. A system does not need to be a complex, thinking robot to be considered AI; even a simple algorithm that suggests the next word in your text message is a form of artificial intelligence.

For an academic overview, explore the Stanford AI Index Report. For hands-on training, consider Introduction to Artificial Intelligence (AI).

The engine that powers most modern AI systems is a process called machine learning. Unlike traditional programming, where developers write explicit, step-by-step rules for a computer to follow, machine learning allows systems to learn directly from data. This learning process is what lets an AI system improve its accuracy as it is trained on more data, without developers hand-writing a rule for every case. For instance, imagine teaching a computer to identify a dog in a photo. Instead of trying to program rules for "floppy ears" or "a wagging tail," you would provide the system with thousands of labeled images of dogs. The machine learning model analyzes these examples and learns the distinguishing features on its own.

Build core ML skills in Learning Tree’s Introduction to Machine Learning and review IBM’s Machine Learning Overview for additional context.
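To make the contrast with rule-writing concrete, here is a minimal Python sketch of a nearest-centroid classifier. Instead of photos, it assumes each example has already been reduced to two hypothetical numeric features (an "ear droopiness" score and a "snout length" score); the features, values, and labels are invented for illustration. Notice that the program contains no rule about what makes a dog: it averages the labeled examples for each class and assigns a new example to whichever class average is closest.

```python
from math import dist

# Hypothetical labeled training data: (features, label).
# Features are invented scores (ear_droopiness, snout_length) in [0, 1].
training_data = [
    ((0.9, 0.8), "dog"),
    ((0.8, 0.7), "dog"),
    ((0.7, 0.9), "dog"),
    ((0.2, 0.3), "cat"),
    ((0.1, 0.2), "cat"),
    ((0.3, 0.1), "cat"),
]


def train(examples):
    """Learn one centroid (average feature vector) per label from the examples."""
    sums = {}
    counts = {}
    for features, label in examples:
        running = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            running[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(total / counts[label] for total in running)
            for label, running in sums.items()}


def predict(centroids, features):
    """Return the label whose learned centroid is nearest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


model = train(training_data)
print(predict(model, (0.85, 0.75)))  # dog
print(predict(model, (0.15, 0.25)))  # cat
```

Adding more labeled examples shifts the centroids, and therefore the model's behavior, without anyone editing the prediction logic. Real image classifiers learn far richer features directly from pixels, but the principle of learning from labeled examples is the same.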

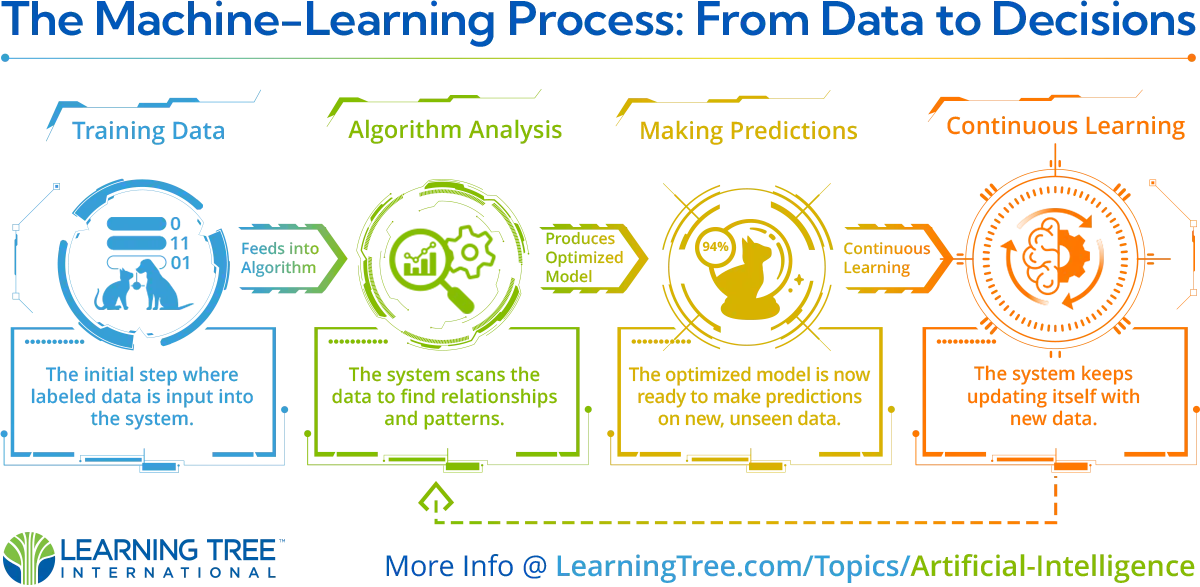

The Machine Learning Process: A Step-by-Step Explanation

Machine learning models are trained, not explicitly coded. This training process generally follows four essential stages that allow an AI system to adapt and improve its capabilities. This structured approach is designed to help the model generalize, that is, make sense of new information it has never seen before.

Data Collection: The journey begins with gathering vast amounts of data. This "training data" can include images, text, numerical information, or videos. The quality, accuracy, and diversity of this initial dataset are critical, as they form the foundation of the model's knowledge and will directly influence its future performance.

Pattern Recognition: The machine learning algorithm processes this data to identify underlying patterns, relationships, and important features. In a dataset of customer purchase histories, for example, an algorithm might learn to spot patterns that indicate which products are frequently bought together, enabling more effective recommendations.

Model Optimization: As the algorithm identifies patterns, it continuously adjusts its internal parameters to improve its accuracy. This is an iterative cycle of testing and refinement. The model's predictions are compared against known outcomes in the data, and it tunes itself to minimize errors and produce more reliable results.

Deployment and Prediction: Once the model is sufficiently trained and optimized, it is deployed to make predictions or decisions on new, unseen data. Deployment is not the end of the lifecycle: well-run systems are monitored in production and periodically retrained or updated with new data, so their performance keeps pace with changing conditions.
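The four stages can be sketched end to end in a few lines of Python. This is a minimal illustration, not a production workflow: it assumes a synthetic dataset generated from the hypothetical relationship y = 3x + 2 plus noise, uses plain gradient descent on a one-feature linear model as the optimization step, and holds out part of the data to check how the model handles examples it did not train on.

```python
import random

# 1. Data collection: synthetic (x, y) pairs from an assumed relationship
#    y = 3x + 2 with a little random noise. A fixed seed keeps runs repeatable.
rng = random.Random(42)
data = [(x, 3 * x + 2 + rng.gauss(0, 0.1))
        for x in (rng.uniform(0, 1) for _ in range(100))]
train_set, test_set = data[:80], data[80:]  # hold out 20% as unseen data


def mse(w, b, rows):
    """Mean squared error of the model y = w*x + b on the given rows."""
    return sum((w * x + b - y) ** 2 for x, y in rows) / len(rows)


# 2-3. Pattern recognition and model optimization: start from arbitrary
#      parameters and repeatedly nudge them in the direction that reduces
#      the error on the training data (batch gradient descent).
w, b = 0.0, 0.0
learning_rate = 0.5
for _ in range(2000):
    grad_w = sum(2 * (w * x + b - y) * x for x, y in train_set) / len(train_set)
    grad_b = sum(2 * (w * x + b - y) for x, y in train_set) / len(train_set)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned w={w:.2f}, b={b:.2f}")
print(f"error on unseen test data: {mse(w, b, test_set):.4f}")

# 4. Deployment and prediction: apply the trained model to a new input.
new_x = 0.5
print(f"prediction for x={new_x}: {w * new_x + b:.2f}")
```

The test-set error is measured on rows the model never trained on, which is the point of the held-out split. Updating a deployed model with newer data amounts to running the same optimization loop again.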

Types of AI: Narrow vs. General Intelligence

Artificial Intelligence is often categorized into two primary types based on its capabilities: Artificial Narrow Intelligence (ANI) and Artificial General Intelligence (AGI). Understanding this distinction is key to grasping where AI technology stands today and where it might be headed in the future. It helps separate the practical applications we use now from the more speculative concepts explored by researchers.

Artificial Narrow Intelligence (ANI): Also known as Weak AI, ANI is designed to perform a single task or a narrow range of related tasks. This is the type of AI we interact with every day. Think of the AI that plans routes in your navigation app, the algorithms that filter spam from your email, or the facial recognition feature on your smartphone. While these systems can be highly effective within their specific domains, they cannot perform tasks outside of their designated function. An AI trained to play chess cannot suddenly offer medical advice.

Artificial General Intelligence (AGI): Also known as Strong AI, AGI refers to a hypothetical form of AI that possesses human-like intelligence and cognitive abilities. An AGI system would be able to understand, learn, and apply its knowledge across a wide range of different domains, much like a human being. It could solve novel problems, think abstractly, and adapt to unfamiliar situations without needing specific training for each new task. AGI remains theoretical and is a long-term goal for many in the AI research community.

Real-World Applications of AI Across Industries

AI is not a distant future promise; it is a present-day reality that delivers significant value across nearly every sector. Its ability to analyze data at an immense scale, automate complex processes, and provide predictive insights has made it an indispensable tool for innovation and efficiency. Here are a few everyday examples that show how AI applications are integrated into our lives:

Healthcare: In the medical field, AI systems are transforming diagnostics and treatment. Machine learning models analyze medical images such as X-rays and MRIs to help doctors detect diseases like cancer earlier and, in some settings, more accurately. AI also powers predictive models that can identify patients at high risk for certain conditions, enabling proactive care and personalized treatment plans that can improve patient outcomes.

For perspectives on AI in healthcare, see HBR on GenAI in Healthcare.

Cybersecurity: Machine learning models are on the front lines of modern digital defense. These systems monitor network traffic in real time to detect and respond to potential threats. They excel at identifying anomalous patterns that might indicate a sophisticated cyberattack, helping organizations stop breaches before they cause significant damage and protect sensitive data from unauthorized access.

Learn more in our blog, Navigating the Future of Cybersecurity, and explore NIST’s AI Risk Management Framework.
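As a rough illustration of the anomaly detection described above, the following Python sketch flags minutes whose request counts deviate sharply from a learned baseline. It is a deliberately simple statistical stand-in (a z-score threshold), not how any particular security product works; the traffic numbers and the threshold of 3 standard deviations are assumptions chosen for the example.

```python
from statistics import mean, stdev

# Hypothetical requests-per-minute counts observed during normal operation.
baseline = [120, 118, 125, 130, 122, 119, 127, 124, 121, 126]

# Learn what "normal" looks like from the baseline window.
mu = mean(baseline)
sigma = stdev(baseline)


def is_anomalous(count, threshold=3.0):
    """Flag a count more than `threshold` standard deviations away from the baseline mean."""
    return abs(count - mu) / sigma > threshold


incoming = [123, 128, 410, 117]
for count in incoming:
    status = "ALERT" if is_anomalous(count) else "ok"
    print(count, status)
```

Production systems generally model many signals at once and learn far richer notions of normal behavior, but the core idea of comparing new activity against a learned baseline carries over.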

Communication: Have you ever used a customer service chatbot or a virtual assistant like Siri or Alexa? These tools rely on Natural Language Processing (NLP), a branch of AI that enables machines to understand, interpret, and generate human language. NLP makes our interactions with technology more conversational and intuitive, bridging the gap between human language and computer understanding.

For implementation strategies, watch Unlocking AI: Practical Strategies for Implementation and review Google’s ML Glossary entry on NLP.
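To show one small piece of what NLP involves, here is a toy Python sketch of intent matching for a customer service chatbot: it tokenizes a message into lowercase words and picks the intent whose example phrases share the most words with it. The intents and phrases are hypothetical, and modern assistants rely on learned language models rather than simple word overlap, so treat this only as an illustration of turning text into something a program can compare.

```python
import re

# Hypothetical intents, each described by a few example phrases.
INTENTS = {
    "check_order": ["where is my order", "track my package", "order status"],
    "reset_password": ["reset my password", "forgot password", "cannot log in"],
    "opening_hours": ["when are you open", "opening hours", "what time do you close"],
}


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


# Precompute the vocabulary associated with each intent.
INTENT_WORDS = {intent: set().union(*(tokenize(p) for p in phrases))
                for intent, phrases in INTENTS.items()}


def classify(message):
    """Return the intent with the largest word overlap, or None if nothing matches."""
    words = tokenize(message)
    best = max(INTENT_WORDS, key=lambda intent: len(words & INTENT_WORDS[intent]))
    return best if words & INTENT_WORDS[best] else None


print(classify("Hi, I forgot my password again"))  # reset_password
print(classify("Can you track my package?"))       # check_order
```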

Data Analysis: Modern businesses collect enormous volumes of data from websites, sales, and customer interactions. AI-powered analytics tools can sift through this data to uncover valuable insights, trends, and predictions that would be impractical for a human analyst to find manually. This helps organizations make smarter, data-driven decisions about everything from product development and supply chain optimization to targeted marketing strategies.

See also McKinsey’s State of AI 2025 for data on enterprise adoption.
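As a small example of sifting raw records for an insight, the Python sketch below counts which pairs of products appear together in the same order, the kind of frequently-bought-together pattern mentioned in the machine learning process above. The order data is invented, and simple pair counting is only a first step toward more sophisticated techniques such as association-rule mining.

```python
from collections import Counter
from itertools import combinations

# Hypothetical order history: each order is the set of products purchased together.
orders = [
    {"laptop", "mouse", "laptop bag"},
    {"laptop", "mouse"},
    {"phone", "phone case"},
    {"laptop", "laptop bag"},
    {"phone", "phone case", "charger"},
    {"laptop", "mouse", "monitor"},
]

# Count every unordered product pair that appears in the same order.
# Sorting each order gives every pair a single canonical form.
pair_counts = Counter()
for order in orders:
    for pair in combinations(sorted(order), 2):
        pair_counts[pair] += 1

# Report the pairs bought together most often.
for (a, b), count in pair_counts.most_common(3):
    print(f"{a} + {b}: bought together in {count} of {len(orders)} orders")
```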

Addressing the Ethical Challenges in AI Development

As AI becomes more powerful and integrated into society, its development raises important ethical questions that require careful consideration. Ensuring that AI is developed and used responsibly is critical to harnessing its benefits while mitigating potential harm. The main concerns revolve around fairness, transparency, and security, which developers and policymakers must address together.

For governance frameworks, consult OECD AI Principles and the EU’s AI Act.

Common Ethical Concerns in AI

Bias in AI Models: An AI system learns from the data it is given. If that data reflects existing societal biases, the AI can learn and perpetuate those same outdated biases. For example, an AI hiring tool trained primarily on historical data from a male-dominated industry might unfairly penalize qualified female candidates. This creates a significant risk of excluding well-qualified candidates who deserve a role on merit.

Explore mitigation strategies: WEF on addressing bias in AI.
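One common first check for the kind of hiring bias described above is to compare selection rates across groups. The Python sketch below computes each group's rate of positive outcomes and the ratio of the lowest rate to the highest; under the "four-fifths rule" used as a rule of thumb in U.S. employment guidance, a ratio below 0.8 is often treated as a sign of possible adverse impact. The screening results here are invented, and a low ratio is a signal for investigation, not proof of bias on its own.

```python
from collections import defaultdict

# Hypothetical screening outcomes from a model: (applicant_group, advanced_to_interview).
outcomes = [
    ("men", True), ("men", True), ("men", False), ("men", True), ("men", True),
    ("women", True), ("women", False), ("women", False), ("women", True), ("women", False),
]


def selection_rates(records):
    """Return the fraction of applicants in each group who received a positive outcome."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, advanced in records:
        totals[group] += 1
        selected[group] += advanced  # True counts as 1, False as 0
    return {group: selected[group] / totals[group] for group in totals}


rates = selection_rates(outcomes)
impact_ratio = min(rates.values()) / max(rates.values())

print(rates)  # {'men': 0.8, 'women': 0.4}
print(f"impact ratio: {impact_ratio:.2f}")  # 0.50
if impact_ratio < 0.8:
    print("Selection rates differ enough to warrant a closer look at the model and its data.")
```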

Lack of Transparency (The "Black Box" Problem): Many advanced AI models, particularly in deep learning, operate as "black boxes." This means that even their creators cannot fully explain how the model arrived at a specific decision or recommendation. This lack of transparency is highly problematic in critical applications like loan approvals, criminal justice, or medical diagnoses, where understanding the reasoning behind a decision is essential for accountability and trust.

Learn more about explainability: IBM’s Explainable AI resources.
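Explainability techniques try to probe a black box from the outside. One widely used model-agnostic idea is permutation importance: shuffle one input feature at a time and see how much the model's behavior changes. The Python sketch below applies a simplified variant (counting how many decisions flip, rather than measuring an accuracy drop against true labels) to a stand-in loan model; the model, features, and applicants are all hypothetical, and this is not any library's implementation.

```python
import random


# A stand-in "black box": we can call it, but we treat its logic as hidden.
# Hypothetical rule: approve when income is high enough relative to debt.
def black_box_model(applicant):
    income, debt, zip_digit = applicant
    return income - 2 * debt > 50  # zip_digit is deliberately ignored


# Hypothetical applicants: (income, debt, last digit of zip code), arbitrary units.
rng = random.Random(0)
applicants = [(rng.uniform(0, 200), rng.uniform(0, 60), rng.randint(0, 9))
              for _ in range(500)]
decisions = [black_box_model(a) for a in applicants]  # the behavior to explain


def permutation_importance(feature_index, seed=1):
    """Fraction of decisions that flip when one feature is shuffled across applicants."""
    column = [row[feature_index] for row in applicants]
    random.Random(seed).shuffle(column)
    shuffled = [row[:feature_index] + (value,) + row[feature_index + 1:]
                for row, value in zip(applicants, column)]
    flips = sum(black_box_model(row) != original
                for row, original in zip(shuffled, decisions))
    return flips / len(applicants)


for name, index in [("income", 0), ("debt", 1), ("zip_digit", 2)]:
    print(f"{name}: importance {permutation_importance(index):.3f}")
```

Because the model never looks at zip_digit, shuffling it changes no decisions, while income and debt show clear effects. Techniques like this reveal which inputs a model relies on without requiring access to its internals, though they do not fully explain individual decisions.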

Security and Misuse: The same capabilities that make AI a powerful tool for good can also be exploited for malicious purposes. AI can be used to create more sophisticated cyberattacks, generate convincing disinformation (including fake news and deepfake images, audio, and video), or develop autonomous weapons systems. These potential misuses pose significant security risks to individuals and society at large, demanding robust governance and oversight.

See guidance in NIST’s AI RMF Playbook.

AI Glossary: Key Terms to Know

Model Collapse: A phenomenon where a machine learning model's performance degrades because it begins training on data generated by other AI models instead of original, human-created data. Over successive training cycles, the data becomes a less accurate representation of reality, similar to making a copy of a copy until the image becomes blurry and unrecognizable.

Model Drift: This occurs when an AI model's predictive accuracy declines over time because the statistical properties of the real-world data it processes have changed. The relationships between variables that the model learned during its initial training no longer hold true. For example, an AI model trained to predict consumer buying habits may become inaccurate after a major economic shift alters people's spending behaviors.

Overfitting: Overfitting happens when an AI model is too closely tailored to its training data, capturing noise and random fluctuations rather than underlying patterns. While it performs exceptionally well on training data, it struggles to generalize to new, unseen data. This can result in poor performance during real-world applications.

Feature Engineering: Feature engineering is the process of selecting, transforming, or creating input variables (features) that improve the performance of a machine learning model. Effective feature engineering can lead to better accuracy, as it helps the model focus on the most relevant aspects of the data.

Transfer Learning: Transfer learning is a technique where a pre-trained model, originally developed for one task, is adapted to perform a different but related task. This approach can significantly reduce the amount of data and computation needed for training, making it a popular choice for applications like image recognition and natural language processing.
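Overfitting is easy to demonstrate with a toy experiment. The Python sketch below compares two models on the same noisy data: a "memorizer" that predicts the y value of the nearest training point (so it reproduces the training data, noise and all) and a straight line fitted by least squares. The data-generating rule y = 2x + noise and all sizes and seeds are assumptions made for the illustration.

```python
import random

# Synthetic data from an assumed rule y = 2x plus random noise (fixed seed for repeatability).
rng = random.Random(7)


def make_data(n):
    return [(x, 2 * x + rng.gauss(0, 1)) for x in (rng.uniform(0, 10) for _ in range(n))]


train, test = make_data(200), make_data(500)


def memorizer(x):
    """Predict the y of the closest training point: a perfect fit to training data, noise included."""
    return min(train, key=lambda point: abs(point[0] - x))[1]


# Least-squares line y = slope * x + intercept, which can only capture the overall trend.
n = len(train)
mean_x = sum(x for x, _ in train) / n
mean_y = sum(y for _, y in train) / n
slope = (sum((x - mean_x) * (y - mean_y) for x, y in train)
         / sum((x - mean_x) ** 2 for x, _ in train))
intercept = mean_y - slope * mean_x


def line(x):
    return slope * x + intercept


def mse(model, rows):
    """Mean squared error of a model's predictions on the given rows."""
    return sum((model(x) - y) ** 2 for x, y in rows) / len(rows)


for name, model in [("memorizer", memorizer), ("line", line)]:
    print(f"{name}: train error {mse(model, train):.2f}, test error {mse(model, test):.2f}")
```

The memorizer achieves zero training error yet does worse than the simple line on fresh data, because it learned the noise rather than the underlying pattern. That gap between training and test performance is the practical symptom of overfitting.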

The Future of Artificial Intelligence

The field of AI is evolving at an incredible pace, with breakthroughs happening continuously. The future promises even more sophisticated applications that will further reshape our world. Two key areas of focus are personalization and automation. Advancements in these areas will bring unprecedented opportunities, but they also come with risks that require careful planning and proactive management from businesses and governments alike.

Future Opportunities:

Hyper-Personalization: AI is expected to enable deeply personalized experiences in areas like education and healthcare. Imagine adaptive learning platforms that tailor coursework to each student's needs and pace. In medicine, this could mean treatments designed around an individual's genetic makeup and lifestyle, leading to more effective healthcare.

Advanced Automation: Automation will extend far beyond simple, repetitive tasks in factories. AI-driven systems will manage complex logistics, optimize entire global supply chains in real time, and even automate aspects of scientific research, accelerating discovery and leading to major gains in efficiency and innovation across all industries.

For applied training, see AI Strategies for Project Managers.

Risks to Anticipate:

Job Displacement: As AI-powered automation becomes more capable, it will likely disrupt many traditional job roles across various sectors. This creates a societal challenge to upskill and reskill the workforce, ensuring that individuals can transition to new roles that collaborate with AI systems rather than being replaced by them.

See evidence in WEF’s Future of Jobs 2025 and consider Learning Tree’s Top AI Courses.

Ethical and Societal Dilemmas: The continued advancement of AI will intensify ethical debates around privacy, autonomy, and control. Establishing robust governance frameworks, clear regulations, and universal ethical guidelines will be essential to ensure that AI development proceeds in a way that benefits humanity as a whole.

Frequently Asked Questions (FAQ)

What is the main difference between AI and machine learning?

Artificial Intelligence is the broad scientific field focused on creating intelligent machines. Machine learning is a specific subset of AI that involves training systems to learn patterns from data without being explicitly programmed. Think of AI as the overall goal and machine learning as one of the primary methods for achieving it.

Is AI dangerous?

AI itself is a tool; its impact—positive or negative—depends on how it is designed and used. While there are valid risks related to bias, security, and job displacement, these can be managed through responsible development practices, strong ethical guidelines, and proactive regulation from governments and industry bodies.

Can I learn AI without a programming background?

Yes. While programming is a key skill for developing new AI systems, many resources and introductory courses focus on the core concepts of AI without requiring deep coding knowledge. Understanding the principles of AI, its applications, and its limitations is a valuable skill for professionals in any field, not just technology.

Begin your AI learning journey today with Learning Tree’s AI Training Catalog and AI Workforce Solutions. Equip yourself with the tools and knowledge to succeed in an AI-driven world.